I suspect I’m not the only researcher who pays at least a little attention to bibliometrics. For those not in the know, I’m talking about things like

Number of papers published

Number of citations of those aforementioned papers

Number of citations per paper

h-index. Named after yours truly, the h-index is the largest number h such that you have published at least h papers with at least h citations each.
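For anyone who hasn't met these before, here is a minimal sketch of how the four metrics above fall out of a plain list of per-paper citation counts. It assumes you already have those counts in hand (for example, copied out of a database export). The citation numbers are made up, and the function names `h_index` and `summarise` are hypothetical. This is just the textbook definition, not how Scopus or any other database computes things internally.

```python
def h_index(citations):
    """Largest h such that at least h papers have at least h citations each."""
    # Rank papers from most to least cited
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        # The paper at position `rank` must have at least `rank` citations
        if cites >= rank:
            h = rank
        else:
            break
    return h


def summarise(citations):
    """Return (number of papers, total citations, citations per paper, h-index)."""
    n_papers = len(citations)
    total = sum(citations)
    per_paper = total / n_papers if n_papers else 0.0
    return n_papers, total, per_paper, h_index(citations)


# Hypothetical citation counts for seven papers (not real data)
example = [25, 8, 5, 4, 3, 1, 0]
print(summarise(example))
```

With these invented numbers the h-index comes out at 4: four papers have at least four citations each, but there aren't five papers with at least five. Note how the one heavily cited paper inflates citations per paper while barely moving h, which is partly why people argue about which metric to report.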

As I’ve alluded to, bibliometrics form part of the case made in applications for grants, promotions, new jobs and other scarce resources. They provide a transparent and objective (stop laughing) measure of the performance of an academic, allowing decision makers to benchmark them against each other. It must all seem very weird for those not in academia. Unsurprisingly, there is a well-established countermovement to bibliometrics that is starting to gain ground. Also unsurprisingly, alternative metrics are starting to pop up, ones that claim to go beyond the above ‘traditional’ metrics.

We’re going to forget all that for a moment, though, and take a look at what the machine says. The machine, in this case, is Scopus, a journal citation database owned by Elsevier, the Dutch publishing behemoth. According to a difficult-to-access MIT Library page dated January 2022,

Elsevier is one of the largest publishers of scholarly journals in the world, publishing more than 2,600 titles. Other large publishers are Taylor & Francis, Springer, and Wiley. RELX, the parent company of Elsevier, had revenues of US $9.8 billion and profit margins of 31.3% in 2019 (Elsevier’s profits account for about 34% of RELX’s total profits).

I am a frequent user of Scopus. I mostly use it to hunt down publications (you can set up keyword e-alerts), but there’s a bunch of other stuff you can do. When I’m applying for grants I can easily get a snapshot of my latest metrics to whack into an updated CV. If I’m interested in the influence of someone’s paper, I can use Scopus to show me all the other papers that have cited it. If I come across an interesting author, I can see what else they’ve published. And when you’re feeling depressed, it’s a great reminder of how far you are behind your peers.

So let’s get to what Scopus says about wildfire and climate change. Or “wildfire AND climate change”, to be precise. The standard search lets you scan abstracts, article titles and so on, but I’m going to start with the pilot Researcher Discovery feature. According to Scopus, “This pilot can help you find and connect with researchers from around the globe.” So let’s find and connect with future fire researchers around the globe!

We’ll limit results to the top 50 entries, sorted by number of ‘matching’ documents since 2017. First up is Professor John Abatzoglou from UC Merced, with 28 matching documents during this period. This may seem like quite a lot of papers to publish over a six-year period, but I can assure you, it’s a radical underestimate. Abatzoglou published almost 140 papers from 2017 to 2022; it’s just that Scopus only classed 28 of them as being about climate change and wildfire.

(For comparison, I published a total of 23 papers between 2017 and 2022, including one in 2017 and none (0) in 2018. I am pretty damn chuffed to be #38 on this list, with 9 matching documents.)

Rounding out the top five are Professor Matthew Hurteau of the University of New Mexico (23 publications), Professor Mike Flannigan from Thomson Rivers University (21), Professor Robert Scheller from North Carolina State University (18) and Associate Professor Crystal Kolden, also of UC Merced (17; clearly due for a promotion).

Grant Williamson (#7) and David Lindenmayer (#8) are the first Aussies on the list, followed by my boss Trent Penman (#10, 15 publications on climate change and wildfire from 2017 to 2022). It’s a very North America- and Australia-centric list (48 of 50 entries, the other two being from Spain and France).

Let’s duck back to the main page. Expanding our search to the article title, abstract and keywords, there are over three and a half thousand publications that match “climate change” AND wildfire. No wonder I feel so out of touch with the literature. The number of publications is accelerating. There were 656 in 2022 alone. We only have to go back to 2010 to find a year with fewer publications than we have had so far in just six weeks of 2023.

Meanwhile, prior to 2004 (which had a measly 21 climate change + wildfire papers), we never had more than 6 papers on the topic in a given year. There weren’t any at all in 1994, and before 1988 the trace of future fire disappears.

This set of search results also provides some coarse subject areas to stuff these 3,667 papers in: About 2,000 in environmental science, 1,200 in agricultural and biological sciences, 900 in earth and planetary sciences, and 400 each in social sciences and medicine. I am proud to say that I have published in more or less all of these.

Let’s round it out by sorting the full list in order of times cited. The most cited paper in the field of wildfire and climate change is Allen et al. (2010) A global overview of drought and heat-induced tree mortality reveals emerging climate change risks for forests, published in Forest Ecology and Management. It’s been cited almost 5,000 times! While it’s an important paper, I’m not sure I would classify it as being primarily about climate change and wildfire. The second paper on this list clearly is, though: Westerling et al.’s 2006 paper on warming and an earlier spring increasing Western U.S. fire activity, with a cool three and a half thousand citations (perhaps each climate change and wildfire paper cited it once).

There are eight papers with over 1,000 citations and 103 with over 200. For reference my most cited paper in this list is a paper I wrote back in 2013 for my PhD with my old environment department boss Pete Smith and Chris Lucas from the Bureau of Meteorology, about trends in the Australian Forest Fire Danger Index. It’s been cited 117 times. For some reason a paper I contributed to (led by Professor Nerilie Abram of ANU) on climate variability, climate change and wildfire did not make it onto this list, even though a big chunk of it is definitely about climate change. It’s had 178 citations and was only published two years ago.

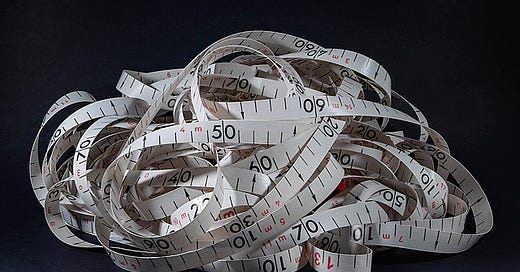

Having these metrics at your fingertips is double or even triple-edged. They provide the illusion that we can easily measure scientific output and quality. There are enough of them that you can always find one or two to put you in the best possible light for whatever it is you’re applying for right now. But as the number of papers (and metrics (and alternative metrics)) grows, it becomes increasingly difficult to find anything to grasp onto. One moment you’re carefully tracking something solid and discrete, the next you’re being washed down a pipe and dumped out in the open ocean, begging for a lifesaver.

To end on a happier note, one nice feature of the Alphabet company equivalent of Scopus is that you can look at the metrics for a research group, instead of an individual. The group I joined last year is called FLARE Wildfire Research and apart from being a friendly, supportive and cool bunch of people, they’re rather prolific. In the last five years us FLAREonauts have collectively been cited over 8,000 times and have an h-index of 43.

That’s gotta count for something, right?

This may not have been your intention, but I read this post as a use of data to articulate the conundrum of assessing which papers contribute valuable information vs. the 'game' of academia.

"But as the number of papers (and metrics (and alternative metrics)) grows, it becomes increasingly difficult to find anything to grasp onto." So many times I've been lost down rabbit holes of paper searching, wading through rivers of studies on minute details to find something useful.

I reckon this is one of the curses of the 'new knowledge or die' mentality in Academia. New research should rightly focus on new knowledge but I often wonder about the questions that academics ask themselves when choosing research topics. e.g. Does the world need this? Is this advancing society's understanding of the world around us? Is this a self-indulgent niche that I've convinced myself is valuable but is really just a topic I'm obsessive about and don't want to leave my comfort zone?

To go a bit existential: do researchers have an obligation to recognise their privileged position of being able to think all day for a living and focus their new research on where they could create the greatest impact? AND/OR is the pursuit of any new knowledge through the scientific method valuable because, even though the research may not be profound in itself, it may lead a researcher or practitioner to genuinely impactful new knowledge in the future?